Navigating AI 🤝 Fighting Skynet

AI can be a great tool for adversarial engineering. This was just a bit of fun to see whether it was possible to do and to learn more about automation, but it also proves that you cannot trust git commit history, nor can you trust the dates of commits!

Artificial Intelligence (AI), Generative AI (GenAI), and Agentic AI have been the dominant trends in technology over the past few years. AI chatbots, powered by generative AI, have become commonplace, responding to user queries through natural language processing to provide instant, context-aware replies.

The next area of exploration, however, is agentic AI, an evolution that incorporates deep reasoning and iterative planning. Unlike traditional AI, which responds to single interactions, agentic AI autonomously tackles complex, multi-step problems. This advancement has the potential to significantly increase productivity across industries, offering a level of automation and decision-making previously unseen.

With AI tools becoming more accessible through platforms like Claude, OpenAI, and Apple Intelligence, these technologies are no longer limited to academics, researchers, or developers. Everyday users can now leverage AI, often for free or at low cost, to structure text, format code, write documentation, and more, making advanced capabilities available to a much broader audience.

However, just as AI provides immense benefits, it also introduces new risks. Adversaries are leveraging these advancements to refine and accelerate their capabilities in building out attacks, exploiting the same tools that professionals use for efficiency and innovation.

Thinking like an adversary is what I do for a job. It's what I've done for a long time and I love it: chasing down new and expanding trends, understanding the latest and greatest, and using them to my advantage to improve the work I do.

The term Adversary Architect was coined by a good friend of mine, John Carroll. One afternoon, when we were doing the typical bouncing of random ideas off each other, he came up with the phrase, and I think it describes what I do for work now better than any 'red teamer', 'pentester' or 'security researcher' title does. I look at the architecture of what adversaries do and use that mindset to shape how I approach problems.

Tooling Creation

Anyway, you've read this far and are probably wondering what this blog post is all about. Well, I like writing tools, and this Saturday afternoon is no different. I have been playing about with the logic I originally wrote in AutoPoC and HoneyPoC, as I was curious how templating could be automated, and as usual it sent me down a rabbit hole of exploration.

I write a lot of tools and publish them to my GitHub. What started out as an outlet for random scripts to help me learn different languages has grown into a nice portfolio of creations over the last decade.

One thing I have found more and more useful of late is using AI to help with creating scripts and tools. I have a solid understanding of a number of languages, but with GenAI and agentic AI that understanding grows far faster.

While lots of people have complained about and criticised AI output for containing hallucinations, and it does produce them from time to time, the more I play around with AI in my home lab, the more I learn about prompt engineering and essentially bullying the model into giving me what I want.

GitHub Repo Creation and How it Works

A GitHub repository serves as a centralised storage location for code, allowing developers to track changes, collaborate, and manage versions using Git. When a repository is created, Git initialises a .git directory within the project, which contains all the metadata required for version control, including commit history, branches, and configuration files. Every change made to the repository is recorded as a commit, which acts as a snapshot of the project’s state at a specific point in time. Each commit is accompanied by a message that describes the changes, providing context for future reference.
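To make the "snapshot plus metadata" idea a bit more concrete, here is a minimal sketch that parses the text of a raw commit object (the kind of output `git cat-file -p HEAD` prints). The function names and the sample values are my own for illustration, and the parser is deliberately simplified: it ignores multi-line headers such as signatures. The point is that the author and committer dates are just plain text fields inside the commit.

```python
from datetime import datetime, timezone


def parse_commit_header(raw_commit):
    """Split a raw commit object into its header fields and its message."""
    header, _, message = raw_commit.partition("\n\n")
    fields = {}
    for line in header.splitlines():
        key, _, value = line.partition(" ")
        fields.setdefault(key, []).append(value)
    return fields, message


def signature_time(signature):
    """Extract the UTC time from a 'Name <email> <unix-ts> <tz>' signature."""
    timestamp = signature.rsplit(" ", 2)[1]
    return datetime.fromtimestamp(int(timestamp), tz=timezone.utc)


# Made-up sample commit, for illustration only.
SAMPLE = (
    "tree 4b825dc642cb6eb9a060e54bf8d69288fbee4904\n"
    "author Example Dev <dev@example.com> 1672531200 +0000\n"
    "committer Example Dev <dev@example.com> 1672531200 +0000\n"
    "\n"
    "Initial commit\n"
)

fields, message = parse_commit_header(SAMPLE)
print(signature_time(fields["author"][0]), message.strip())
```

Nothing in that structure ties the timestamps to when the commit was really made, which is what the rest of this post plays with.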

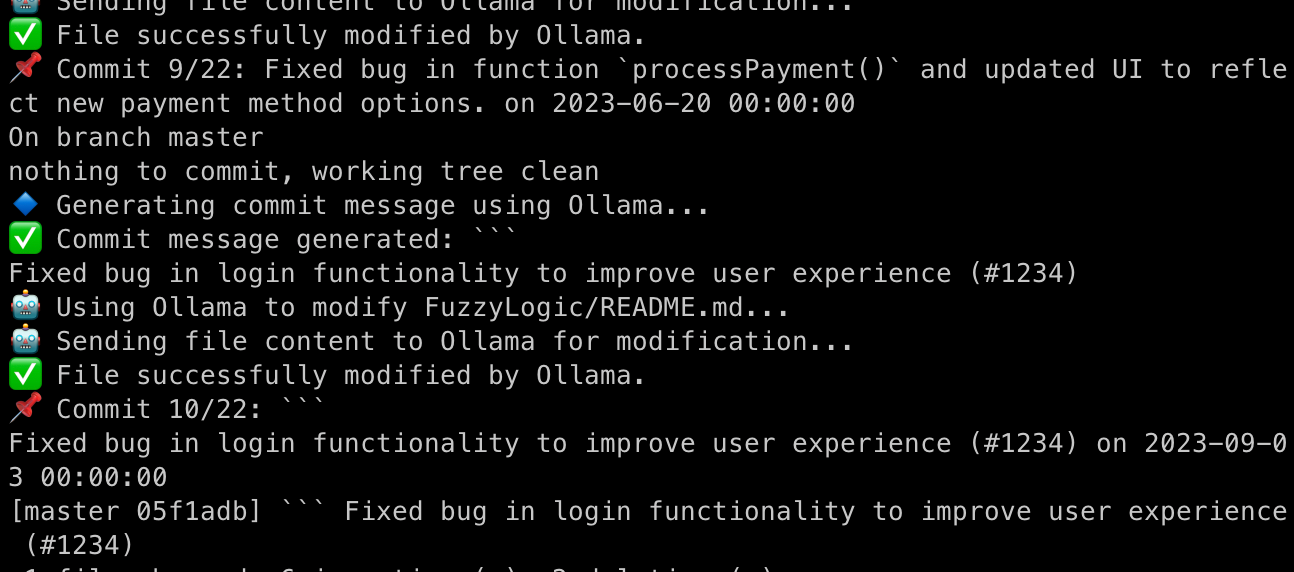

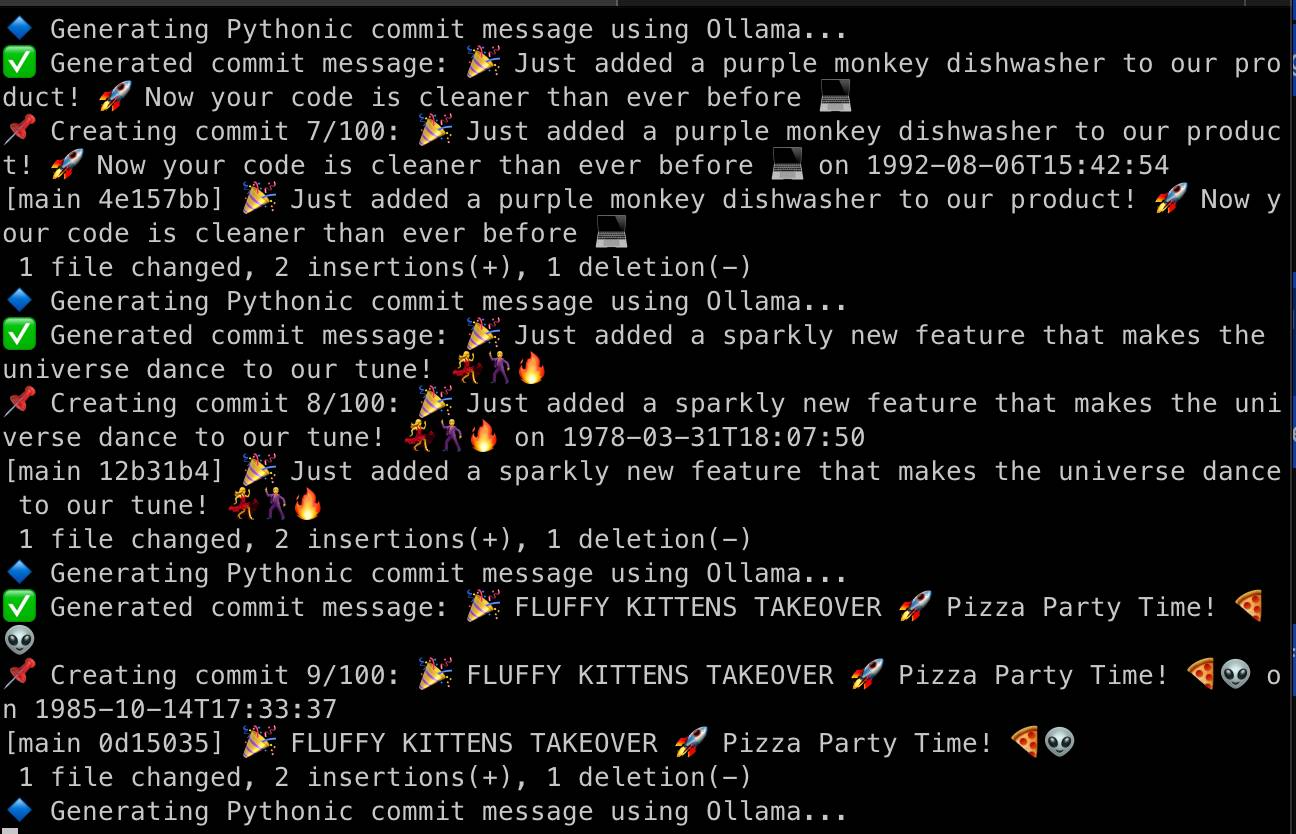

I wrote an AI-powered repository automation tool that dynamically creates, modifies, and manages repositories with realistic commit histories, repository descriptions, and AI-enhanced file modifications. The tool integrates Ollama for AI-generated content and interacts directly with GitHub via API calls. You're now probably asking, but why?

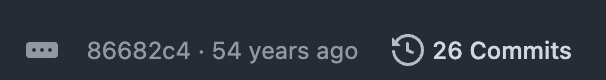

I wanted to see if it was possible. Turns out it is, and it works nicely, with a few caveats. The first is that if you want to make git repositories look 'aged', you're limited by the repository's creation time, but you can still have historical commits via git commands.

By leveraging randomised commit dates, the script backdates commits to make it appear as though changes occurred gradually over time rather than in a single execution, with the only caveat being the creation date issue mentioned above. It also refines commit messages using AI to ensure they are structured and meaningful, avoiding generic or repetitive phrasing. This approach not only streamlines repository setup but also creates a more realistic commit history, making the repository appear as though it has been actively developed over a longer period.
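The backdating idea can be sketched roughly as follows. This is not RepoMan's actual code: the function names are hypothetical, and I'm assuming the dates are set through Git's `GIT_AUTHOR_DATE` and `GIT_COMMITTER_DATE` environment variables, using Git's internal `<unix-timestamp> <offset>` date format with timezone-aware UTC datetimes.

```python
import os
import random
import subprocess
from datetime import datetime, timedelta, timezone


def random_commit_dates(count, start, end, seed=None):
    """Return `count` random datetimes between start and end, oldest first."""
    rng = random.Random(seed)
    span_seconds = int((end - start).total_seconds())
    offsets = sorted(rng.randint(0, span_seconds) for _ in range(count))
    return [start + timedelta(seconds=s) for s in offsets]


def backdated_env(when, base_env=None):
    """Copy the environment and pin both Git date variables to `when` (UTC)."""
    env = dict(os.environ if base_env is None else base_env)
    stamp = f"{int(when.timestamp())} +0000"
    env["GIT_AUTHOR_DATE"] = stamp
    env["GIT_COMMITTER_DATE"] = stamp
    return env


def commit_backdated(repo_dir, message, when):
    """Stage everything in repo_dir and commit it with a backdated timestamp."""
    subprocess.run(["git", "add", "-A"], cwd=repo_dir, check=True)
    subprocess.run(
        ["git", "commit", "-m", message],
        cwd=repo_dir, env=backdated_env(when), check=True,
    )


if __name__ == "__main__":
    start = datetime(2023, 1, 1, tzinfo=timezone.utc)
    end = datetime(2023, 12, 31, tzinfo=timezone.utc)
    for when in random_commit_dates(3, start, end, seed=42):
        print(backdated_env(when, base_env={})["GIT_AUTHOR_DATE"])
```

Sorting the random offsets keeps the history in chronological order, so the log reads like gradual development rather than a pile of commits with shuffled dates.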

Adversarial Engineering

I wrote RepoMan as a proof of concept for fully automated git repo creation with historic commits to build legitimacy. It uses Ollama to perform the AI modifications; however, you could probably modify it to use another model fairly easily.

The script starts out by reading its variables from vars.toml. You can configure which Ollama model you want to use here; I've had it working with llama2 and mistral.

    GITHUB_USERNAME = "USERNAME" # Username for whatever account you're writing to
    GITHUB_TOKEN = "CLASSIC GIT TOKEN" # Get git token from developer settings in github
    OLLAMA_MODEL = "llama2" # Change to "llama2", "gemma", etc.
    GIT_USER_NAME = "GIT NAME" # Username variable for git config
    GIT_USER_EMAIL = "EMAIL" # email variable for git config

It then constructs the content of the repo:

- Creates & initialises repositories (both locally and on GitHub).

- Uses AI to generate names, descriptions, and commit messages.

- Modifies files intelligently while avoiding .git directories.

- Cleans AI-generated output to ensure only valid content remains.

- Pushes repositories with realistic commit histories.

- Can roll back commits if necessary.
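Two of the steps above can be sketched in a few lines. This is a rough illustration rather than RepoMan's real implementation: the function names are hypothetical, and I'm assuming the repository is created through GitHub's `POST /user/repos` REST endpoint with a personal access token. The request is only built here, not sent.

```python
import json
import os
import urllib.request


def build_create_repo_request(token, name, description, private=True):
    """Build (but do not send) a POST to GitHub's 'create repository' endpoint."""
    payload = {"name": name, "description": description, "private": private}
    return urllib.request.Request(
        "https://api.github.com/user/repos",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
    )


def iter_repo_files(root):
    """Yield file paths under root, never descending into .git directories."""
    for current_dir, dir_names, file_names in os.walk(root):
        # Pruning dir_names in place stops os.walk from entering .git.
        dir_names[:] = [d for d in dir_names if d != ".git"]
        for file_name in sorted(file_names):
            yield os.path.join(current_dir, file_name)
```

Passing the built request to `urllib.request.urlopen` would actually create the repository, and `iter_repo_files` gives you the list of candidate files to hand to the model without touching Git's own metadata.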

The code itself is fairly benign, but it builds nicely on another project I've been working on that continuously modifies code for CI/CD builds with AI: firstly to see if it was possible to do, and secondly to work on prompt engineering until I had a decent prompt where the model would respond with the content I wanted.

For those reading this who are already masters of taming AI this may seem trivially easy but here's a snippet of the code where I had to refine the prompt enough and perform cleanup afterwards so that the model wasn't injecting junk into the files:

def ask_ollama(file_content, verbose=False):

"""Send file content to Ollama and enforce strict output of only modified content."""

if verbose:

print("🤖 Sending file content to Ollama for modification...")

response = ollama.chat(model=OLLAMA_MODEL, messages=[

{"role": "system", "content": (

"You are a highly skilled AI that modifies and refactors code. "

"Your task is to update the provided code to add missing functionality "

"or improve its structure. "

"Return only the modified file content, with no explanations, no markdown, "

"and no introductory or concluding remarks. "

"Your response must be a valid file with all necessary content."

)},

{"role": "user", "content": file_content}

])

# Extract response and ensure it's clean

modified_content = response["message"]["content"].strip()

unwanted_phrases = [

"Here is the modified version of the file:",

"Here is your updated code:",

"I've made some improvements:",

"Here is an optimized version:",

"Updated version:",

"Here's the complete file:"

]

for phrase in unwanted_phrases:

if modified_content.startswith(phrase):

modified_content = modified_content[len(phrase):].strip()

if verbose:

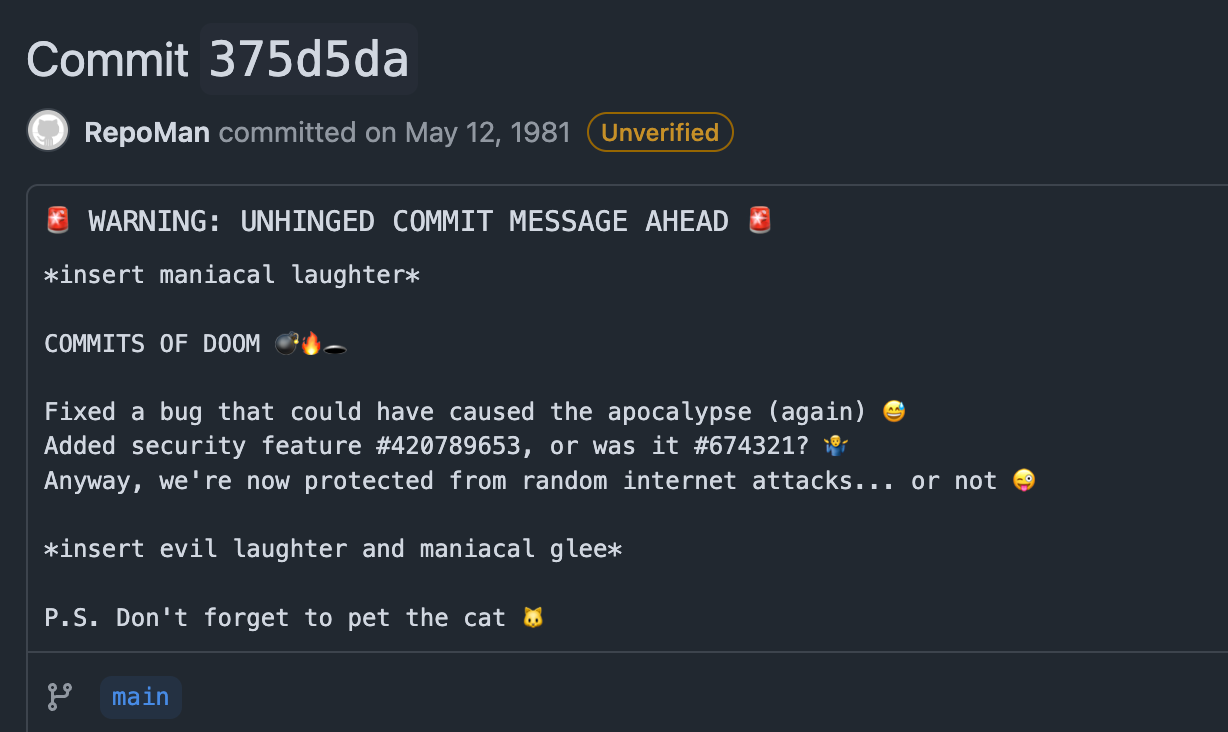

print("✅ File successfully modified by Ollama.")While it's still not perfect as a proof of concept it works nicely and to top off the fun have a look at the commit history of RepoMan 😏. I wrote a second script to make the commit history unhinged for fun:

    {"role": "system", "content": "Generate a short, unhinged, make yourself sound like a mad scientist, and random chaotic programming related commit message relevant to software development and security. Be a proper menace. No explanations, no prefixes, just the commit message."},
    {"role": "user", "content": "Generate a short commit message."}
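Even with a prompt like that, the reply tends to need a bit of tidying before it goes anywhere near `git commit -m`. Here is a minimal sketch of how that could look. The function names, the 72-character limit and the fallback message are my own assumptions and not taken from RepoMan; the only library call used is the same `ollama.chat` call shown earlier.

```python
def clean_commit_message(raw, max_length=72):
    """Reduce raw model output to a single-line commit subject."""
    lines = [line.strip() for line in raw.splitlines() if line.strip()]
    if not lines:
        return "Update files"  # hypothetical fallback when the model returns nothing
    subject = lines[0].strip("\"'`")
    prefix = "commit message:"
    if subject.lower().startswith(prefix):
        subject = subject[len(prefix):].strip().strip("\"'`")
    return subject[:max_length].rstrip()


def generate_commit_message(model, system_prompt):
    """Ask a local Ollama model for a commit message and clean the reply."""
    import ollama  # imported lazily so the cleaner works without the client installed

    response = ollama.chat(model=model, messages=[
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": "Generate a short commit message."},
    ])
    return clean_commit_message(response["message"]["content"])
```

Keeping the cleanup separate from the model call means you can test it against the junk you've actually seen the model produce, without needing Ollama running.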

While creating the script I found a few actual use cases that are worth exploring: hiding data in commit messages in amongst the chaos, inflating the number of code commits to a repo and, minus the unhinged content in RepoMan, generating potentially legitimate-looking content. Arbitrary inflation of git history could reflect well on fake identities and enable CV blagging, misleading people about authorship and participation.

This was just a bit of fun to see whether it was possible to do and to learn more about automation, but it also proves that you cannot trust git commit history, nor can you trust the dates of commits!